Note: Warr, Oster, and Mishra are at it again with a shared blog post. First (really terrible) draft by Punya, which was cleaned up by ChatGPT and then went through cycles of editing by all of us.

Note: An addendum written after Apple’s announcement of its own AI initiatives was added, at the end of this post, on June 13, 2024. The title of this post was also edited to reflect this change.

Here we go again.

Turns out that Google’s new AI search feature is acting up. It told some people that “Astronauts have met cats on the moon, played with them, and provided care” (which inspired the image above) or that rocks are a good source of nutrients.

Arghhhh….

As we wrote just a few days ago in “Why are we surprised? Hallucinations, bias and the need for teaching with and about genAI”:

To summarize, we argued that generative AI HAS to hallucinate. We wrote that “hallucination is all they do. That’s it. Making stuff up is their modus operandi, and they do so word by statistically generated word.”

What IS (or should be) surprising is that this crazy predictive process leads (quite often) to incredibly coherent and amazing outputs.

But despite these models being trained by humans through reinforcement learning from human feedback, and despite all the guardrails built to control their wayward nature, their true essence remains unpredictable. Not accepting or recognizing this leads to the kind of headlines (and surprises) we keep reading about.

Recognizing this fact (that hallucinations are built into their very nature) has significant implications for how we use these technologies, especially in education. Thinking of genAI as a truth engine is misguided because these technologies have no sense of truth. They have no ability to develop their “knowledge” from the true, sensory-laden and complex world: everything simply comes from associations in their training data. They are, in the philosophical sense that Harry Frankfurt meant it, bullshit artists. They embody, quite literally, the postmodern idea that all we have are texts that connect to other texts. (In this context we use text as being any representation, not just words, but images, video, diagrams, and more.)

Now this is a challenge for companies like OpenAI, Google, Meta and Microsoft who have bet their future on this technology. They know that there is no way these hallucinations can be entirely removed. Thus, they must find a way to ameliorate this problem by how they present their products. And each of these companies has selected its own strategy for doing so.

The company most challenged by this fact is Google, whose identity is built around giving us the right answer. Search (accurate and fast) is Google’s reason for existing as a company. Thus their approach to hallucination (akin to Perplexity) is to give us a synopsis and point us to sources that led to that synopsis, knowing that their synopsis could be wrong. Their hope is that over time, they will minimize these errors (though they also know that they will never really go away). There are those who argue that this is bringing Google back to what it was meant to be as a company – though it is unclear if that is truly happening.

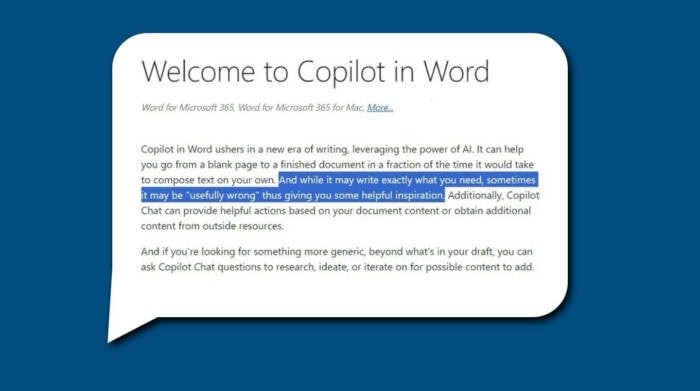

Microsoft, on the other hand, is an enterprise technology company: their goal is to help us do our work faster and better. It is no surprise that they have gone the copilot route, with their tools serving as coaches and assistants. Think Clippy on steroids (may he Rest in Peace). Even the name “Copilot” suggests it is meant to help us accomplish tasks, to land the metaphorical plane.

So, the right answer for Microsoft is to emphasize that it is useful, even if sometimes it may get things wrong. This is why their introduction to Copilot in MS Word states that “sometimes it may be ‘usefully wrong’ thus giving you some helpful inspiration.” This is their way of acknowledging that Copilot will get things wrong – but can act as an inspiration.

Though we must add that a copilot getting things “usefully wrong” does not sound very reassuring. Maybe they should have called it a “smart, occasionally drunk, intern.” We are sure Punya would have willingly given up any IP claims for the bragging rights!

Meta took a different approach. Instead of focusing on accuracy or task completion, the powers behind Instagram and Facebook emphasized the seemingly relational capabilities of genAI. For instance, Meta has launched AI chatbots that allow users to interact with celebrity-like characters through WhatsApp, Messenger, and Instagram, although these bots adopt fictional personas rather than mimicking the celebrities’ real lives. The New York Times describes how Instagram is testing “Creator A.I.,” a program that uses artificial intelligence to enable influencers to interact with fans more efficiently by automating responses in their unique “voice” on the platform. This focus on celebrity or influencer personalities reflects the social media company’s valuing of social capital and their aim to augment creators’ capabilities to connect with increasingly large volumes of followers. While Meta may be motivated to make these interactions engaging and seemingly authentic, the focus on fictional personas may demonstrate Meta’s awareness that these tools are prone to hallucinate (though initial reports indicate that this experiment is not playing out as well as they had hoped).

Finally, OpenAI, the upstart. In some ways they are best placed to take advantage of this new technology, mainly because they don’t have legacies to uphold, brand identities to defend. They can be whatever this technology will allow them to be.

So the question really is, what does this technology want to be?

We have argued that to truly understand generative AI and our responses to it, we have no choice but to adopt an “intentional stance.” We are meaning-making, pattern-seeking creatures who read personalities into all kinds of interactive artifacts. Our stone-age brains have never before had conversational, dialogic technologies like these chatbots. These interactions activate our theory of mind, making us perceive intentionality even in simple text-based interfaces (let alone the multimodal interactive bots that OpenAI revealed in their latest demo). This is not in any way to suggest that these AI tools are sentient or anything even close to that. It is a cognitive illusion that emerges from deeply ingrained inferential principles that operate automatically. Generative AI’s conversational nature, engaging us through language, makes it easy and almost automatic to anthropomorphize these tools. Humans, being cognitive misers, instinctively interpret these technologies as psychological beings.

Essentially, OpenAI is leaning into this deeply human proclivity to see agency and intentionality in these tools. It is, in other words, seeking to harness our cognitive blind spots to create (as Ian Bogost in The Atlantic described it) “Our Self-Deceiving Future.”

It is not surprising, therefore, that OpenAI is focusing on giving the next versions of ChatGPT more personality and character. This is seen in the new voice models that have more inflections, emotion recognition, and expression. Perhaps it is related to why they allegedly chose to use Scarlett Johansson’s voice for one of the new models: it adds much more character to the assistant.

Which vision will win out remains to be seen. And maybe there is space for all of these versions to co-exist.

That said, something all of these approaches have in common is an acknowledgment (albeit implicit) that these hallucinations are here to stay.

And irrespective of where things end up, it is best, in educational contexts, to see generative AI not as a truth engine but rather as a possibility engine. A creative cognitive partner whose hallucinations are the seed from which creative ideas can emerge.

Addendum (June 13, 2024): Apple recently announced its AI plans. What is interesting is how consistent their response is with the broader theme that we discussed in this post. Apple’s response focuses on deep integration across applications, baking the technology into just about every device and application it offers. There is also an emphasis on privacy and security, and (most important) a serious concern with keeping you locked into its ecosystem. In addition, it is partnering with OpenAI BUT keeping it somewhat at “arm’s length,” going to it only for certain tasks and only with user permission. And these moves are exactly what we would have predicted given their past history.

The challenge of dealing with hallucinations doesn’t go away, however. As the Wired article “Apple’s Biggest AI Challenge? Making It Behave” describes it:

Apple … seems to understand that generative AI must be handled with care since the technology is notoriously data hungry and error prone. The company showed Apple Intelligence infusing its apps with new capabilities, including a more capable Siri voice assistant, a version of Mail that generates complex email responses, and Safari summarizing web information. The trick will be doing those things while minimizing hallucinations, potentially offensive content, and other classic pitfalls of generative AI—while also protecting user privacy.

The Wired article summarizes what we have been trying to say, namely that the wayward nature of these technologies is not something we can wish away. The article concludes as follows:

… generative AI is definitionally unpredictable. Apple Intelligence may have behaved in testing, but there’s no way to account for every output once it’s unleashed on millions of iOS and macOS users. To live up to its WWDC promises, Apple will need to imbue AI with a feature no one else has yet managed. It needs to make it behave.

Getting genAI to “behave” is indeed the key challenge for all these companies.
