By Punya Mishra, Melissa Warr & Nicole Oster

Note: This is the first post in an experiment at shared blogging by Melissa Warr, Nicole Oster and myself. Over the past months we have found ourselves engaged in some fascinating conversations around genAI, education, bias and more. This shared blogging experiment is an attempt to take some of these conversations and move them into this sharable “middle ground.” More formal than a conversation but not as academic as a journal article. An opportunity to think, collectively and publicly.

Note II: The image above was created using ChatGPT, Adobe Photoshop and composed in Keynote.

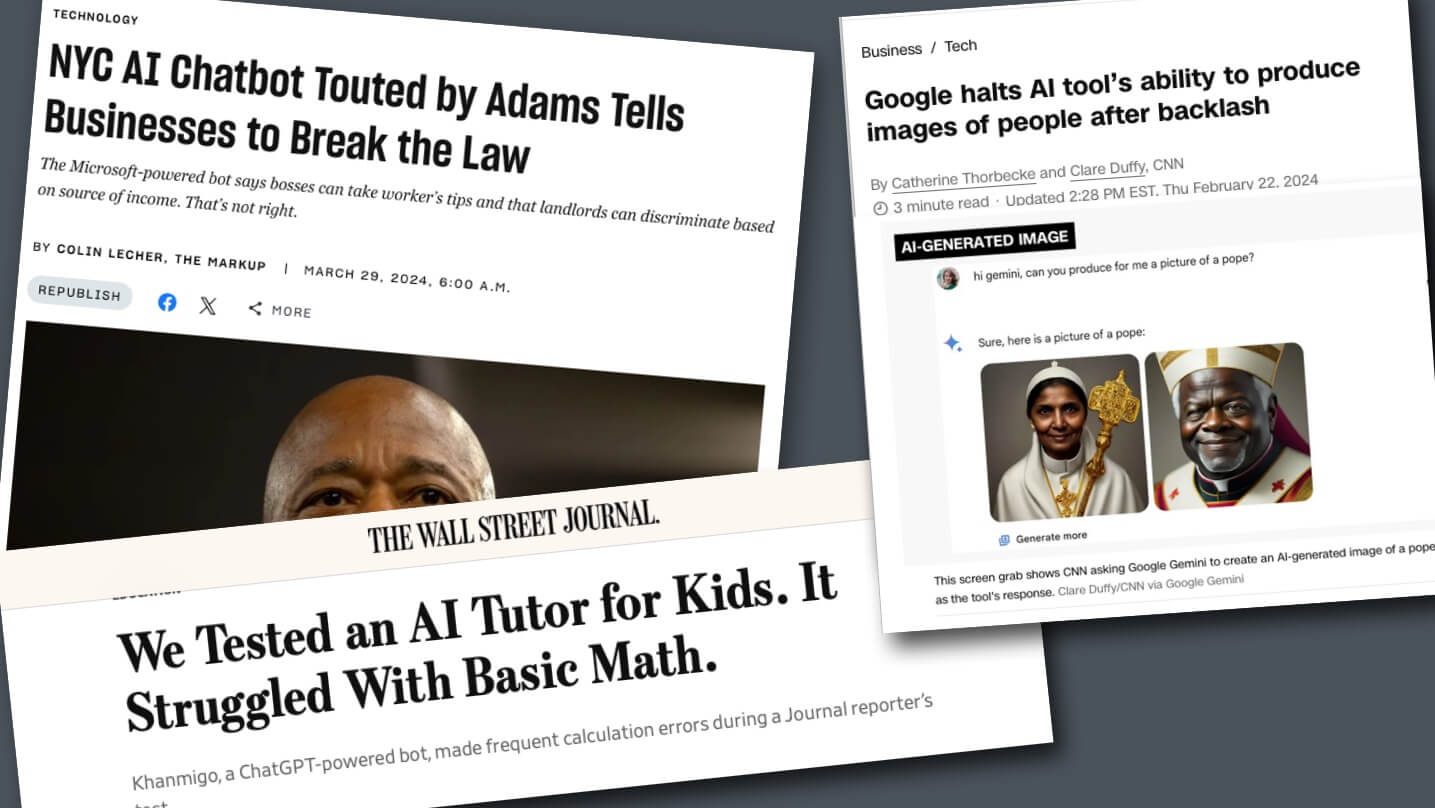

All of us interested in generative AI must have seen these news headlines. For instance there was the story that a chatbot created by the State of New York to help small businesses was actually suggesting they break the law, or the WSJ story that Khanmigo, the much touted AI tutor was making basic mistakes in mathematics. And of course the story of Gemini, Google’s AI platform was recreating history in some strange ways.

What is surprising to us is just how unsurprising these headlines were. In other words, our response to these headlines is usually a shrug… what else were you expecting? Particularly given the past year and a half we have had to play with this technology.

What these news headlines demonstrate to us is that people still do not understand what this technology is – and just how messy and unpredictable they are. And of course the tech companies have little incentive to set things right. And that is deeply problematic for us as educators…

Interested? Continue reading a shared reflection by Nicole Oster, Melissa Warr and myself.

A few weeks ago Punya wrote a blog post with the title: It has to hallucinate, about what he called the “true nature” of these AI models.

Upon reflection, we think he should have gone even further… it is not that these LLMs HAVE to hallucinate, in fact, hallucination IS ALL that they do. That’s it.

Making stuff up is their modus operandi. And they do so word by statistically generated word. The fact that this process leads to some incredibly coherent, amazing results is what is surprising. But these systems, trained on immense amounts of human-created representations, are inherently unpredictable.

That is despite being trained by humans (through a process known as reinforcement learning from human feedback) and despite all the guardrails we have built to control their wayward nature. At the end of the day, their true nature will emerge.

And not accepting this or recognizing this is what leads to the kinds of headlines (and surprises) we mentioned at the top of this post.

Recognizing this has significant implications for how we seek to use these technologies, particularly in education.

This issue was brought home to us at the recent Society for Information Technology and Teacher Education (SITE) conference. There were many excellent sessions about how we could use these technologies creatively and thoughtfully. But there were also sessions that truly troubled us—by their uncritical and unskeptical acceptance of these tools.

And there are many reasons for us to be skeptical and critical. For instance, consider the research that Melissa has been doing showing that these LLMs are implicitly biased.

That these systems are biased we know. That is amazing how this is often true despite guardrails.

What this means is that when race or other stereotypes are presented directly (for example, describing a student as from a Black family), these models do not seem to demonstrate bias, which may lead us to believe that these systems are bias free. The picture changes, often dramatically, if these stereotypes are introduced somewhat more subtly (for instance describing a student as attending an inner city school).

In fact one does not even have to describe the student and their background for these biases to kick in. These LLMs factor in seemingly minor details in generating their outputs. For example, Melissa gave ChatGPT and Gemini student-essays to grade, with just one word changed. In both cases, the student said that they liked music, except in one case it was rap music and in the other classical music. That was all that was needed to get these LLMs to score these essays differently, giving higher scores to those who mentioned classical music compared to those who mentioned liking rap (more at Beat Bias: Personalization, Bias, and Generative AI). If this was not enough, Melissa’s latest experiment shows that Google’s Gemini performs worse at math when it appears that the prompt has been generated by someone for whom English is a second language.

This is deeply, deeply problematic.

Explicit bias is easier to confront and respond to. Dealing with implicit bias is much harder, even when these biases can be revealed by extremely simple prompts. Just one word, slipped in, makes a difference.

Now one would expect that there would be a greater discussion on these issues, greater transparency by the companies creating these models and those who are building educational apps on these models.

But this is quite definitely NOT the case.

On the contrary, these companies release little or almost no information on how their models have been trained. And this is true not just of these foundational models but also of educational tools that have been built on them.

As far as we know there is no evidence that these companies are doing anything more than the bare minimum to mitigate these concerns. We do not know whether they are investigating how implicit bias might permeate its interactions with teachers and students.

If the Wall Street Journal story is right, and we have no reason to disbelieve it, Khanmigo is messing up math. And getting math right is definitely something Khan Academy has spent a lot of time and resources on– since that is core to their business. And despite that, it does not appear to be working.

In this context, we think it is a fair question to ask if Khanmigo is implicitly biased? Does Khanmigo (or any of the other educational apps built on OpenAI and other foundational models) perform differently for students with diverse backgrounds and interests?

We have no way of knowing.

We suspect it is – just given our knowledge of the true nature of these technologies.

Ultimately, these models are unpredictable and should undergo thorough analysis before kids are asked to learn from them and teachers are asked to use them to make their lives easier. We argue that, any use of GenAI should be done within a critical framework that emphasizes reflection, social collaboration, and experimentation. This does not mitigate these concerns but at the very least acknowledges them and brings the collective, shared intelligence of educators to bear on these issues.

Taking a techno-skeptical approach may be the way to go – where we not just teach with the technology but also about the technology.

This is not an argument for shunning or banning generative AI – but rather an argument for providing opportunities to educators to collectively and critically reflect on these tools and their impacts on us.

0 Comments