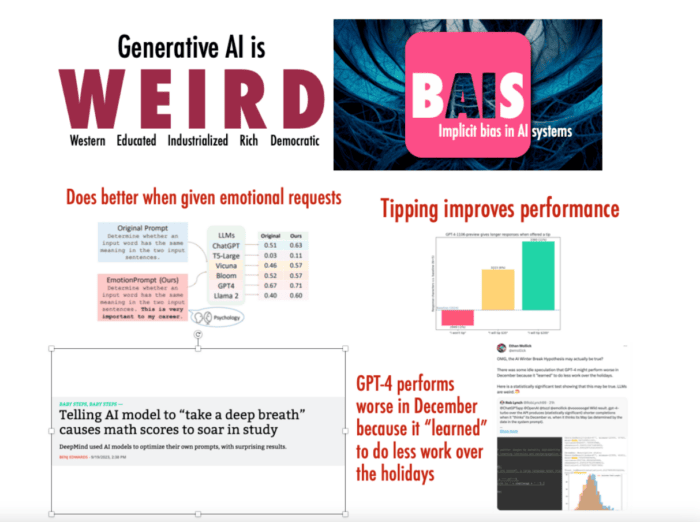

Note: This blog post was almost entirely written by ChatGPT, based on its analysis of a set of images I had uploaded to it. The image above (Weird AI) is an original typographic design created by me. The background sky was created by Adobe Firefly.

To give some context, I took six slides from the deck I used for a recent keynote at the AI Summit in Michigan. Essentially, these slides were an extended riff on how generative AI is weird along multiple dimensions.

I then combined these slides into one large image (see below) and asked ChatGPT to interpret them and write a blog post for me. The exact prompt and GPT's response are given below. GPT's words are in blue and have been included as is, with no editing. I have also added references for each of the points made in the slides.

The 6 slides that were uploaded to ChatGPT

Prompt: There are a set of 6 slides that I used in a recent presentation to make the point that Gen AI is weird. Can you take this set of six slides and come up with a nice descriptive blog post on this topic where each of these images would be used to make a point about the weird nature of gen AI

ChatGPT’s response

Creating a descriptive blog post based on the provided slides can be a great way to delve into the peculiarities of generative AI and the biases inherent in these systems. Here’s a structured outline for your blog post:

The Weird Quirks of Generative AI: A Look at Bias and Unpredictability

Generative AI has become a cornerstone of modern technology, from chatbots to content creation tools. However, as advanced as these systems are, they often exhibit behaviors and outputs that can be deemed as weird or unexpected. A recent presentation highlighted these oddities through a series of insightful slides.

Western, Educated, Industrialized, Rich, Democratic (WEIRD)

The acronym WEIRD captures the essence of generative AI’s primary dataset bias. These systems tend to reflect the biases of their training data, which disproportionately represents Western, Educated, Industrialized, Rich, and Democratic societies. This skew in perspective can lead to AI systems that perform better for users from these backgrounds while potentially alienating others.

Reference: Psychology is Weird [Link]

Emotionally Charged Requests

It appears that generative AI can sometimes perform better when prompted with emotional requests. This suggests that the way we interact with AI can affect its performance, possibly because emotional prompts are more detailed and provide better context for the AI to grasp the user’s intent.

Reference: Large language models can be enhanced by emotional stimuli (Arxiv article: Link)

The Concept of ‘Tipping’ AI

A curious phenomenon observed is the improvement in AI performance when the concept of ‘tipping’ is introduced. While not a literal monetary tip, this metaphorical tipping refers to providing extra information or incentives that seem to spur the AI to ‘try harder’ or ‘think deeper’, resulting in better output.

Reference: Tipping ChatGPT gives longer results (Twitter/X thread: Link)

Telling AI to “Take a Deep Breath”

Instructing an AI to ‘take a deep breath’ had an interesting outcome: a noticeable improvement in math scores in a study. This anthropomorphic instruction hints at the potential of using human-like encouragements to optimize AI performance, which is as fascinating as it is bizarre.

Reference: Telling AI model to “take a deep breath” causes math scores to soar in study (Ars Technica article: Link)

Seasonal Performance Variability

Interestingly, generative AI, specifically GPT-4, showed a dip in performance during December. This suggests that AI systems can ‘learn’ patterns from their interactions with humans, including the tendency to ‘wind down’ during holiday seasons. While this is likely a reflection of the training data, it showcases how AI can mirror human behaviors in unexpected ways.

Reference: Example of the December effect, i.e., GPT results getting worse around the December break (Link)

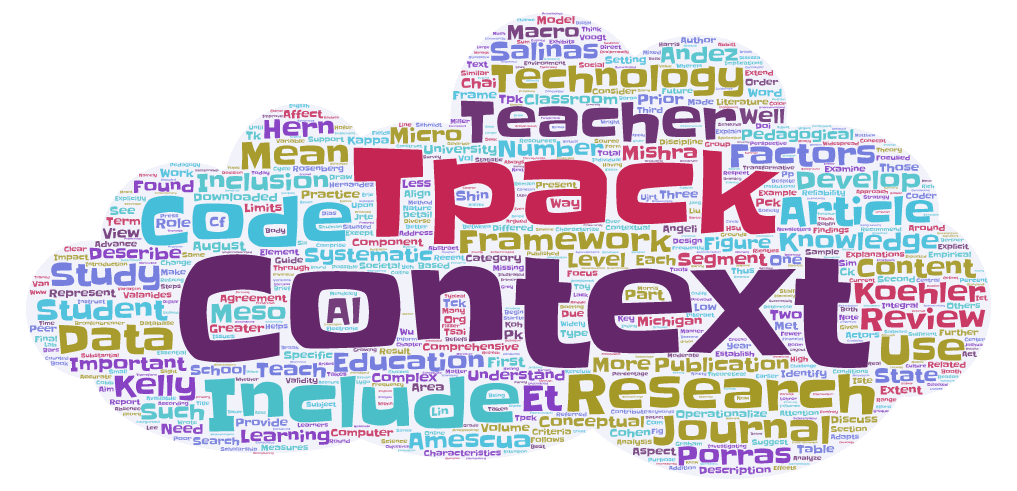

Implicit Bias in AI Systems

The final point of discussion is the implicit bias present in all AI systems. These biases can come from various sources, such as the data they’re trained on, the engineers who build them, and even the societal norms that sneak into their algorithms. Recognizing and addressing these biases is crucial for creating fair and equitable AI systems.

Reference: Warr, Melissa and Oster, Nicole Jakubczyk and Isaac, Roger, Implicit Bias in Large Language Models: Experimental Proof and Implications for Education (November 6, 2023). Available at SSRN: https://ssrn.com/abstract=4625078 or http://dx.doi.org/10.2139/ssrn.4625078

In conclusion, the presentation’s visuals offer a thought-provoking look at the oddities and biases of generative AI. These ‘weird’ behaviors underline the importance of understanding and improving the ways we build and interact with AI systems.

(Note: In your blog post, you would replace the placeholders like #image-1 with actual images from your slide deck.)

For any further assistance or to flesh out each section with more detail, feel free to reach out!

Not too bad!

What, I wonder, is weirder: the fact that generative AI is weird in these specific ways, or that we take these capabilities for granted? None of this (uploading images and having GPT interpret them and write a coherent piece of prose) was possible even six months ago. Let that fact sink in…
