In a previous post I explored some of the visual capabilities of ChatGPT. This is a continuation of those experiments, in which ChatGPT helped me identify the date and time of a solar eclipse based on a photo I took decades ago, as a graduate student at the University of Illinois at Urbana-Champaign. Moreover, the photo was not of the eclipse per se, but rather of the ground… Yes, it is that crazy. Read on.

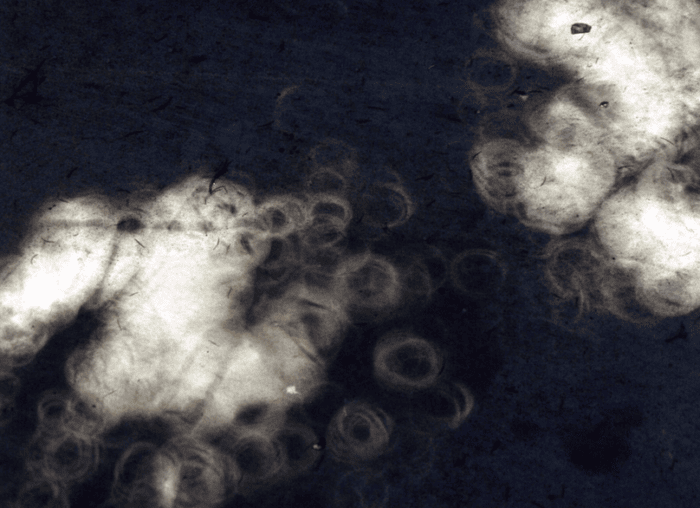

To give some context on how this experiment came to be: yesterday (October 14, 2023) Phoenix was in the path of a partial solar eclipse. I was busy with my Silver Lining for Learning webinar when the eclipse occurred, but I did step away for a few minutes to take a few pictures (and no, I did NOT look directly at the sun!). One of the pictures I took was actually from inside my office. It was a picture of the partially eclipsed sun shining on my window shades. See below for what that looked like.

If you look closely, you will see multiple crescent shapes in that image. What is going on here?

These shapes, as it turns out, are created through a simple yet surprising natural process (more here and here). Right outside my office windows is a tree, and the light of the partially eclipsed sun was shining onto my window shades after being filtered through the leaves of that tree. Those strange overlapping crescent shapes are images of the eclipsed sun, cast by tiny pin-hole cameras formed naturally by the gaps between the leaves.

What most people don’t realize is that this happens on normal (non-eclipse) days as well, except that on those days the images are perfect circles, capturing the round shape of the sun (relative to the earth at that moment in time). For instance, the image below captures a typical sun-dappled path, with many, many little bright circles on the ground. What we don’t realize is that each of those blobs is an image of the sun, generated by hundreds of tiny, naturally occurring pin-hole cameras in the foliage above. Each blob is a unique image of the sun! (Image credit: Adam.)
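To put rough numbers on those blobs, here is a minimal sketch of the pin-hole geometry. It assumes the gap between the leaves is small compared to the spot it casts, that the light lands more or less straight on the surface, and that the sun's apparent diameter is about 0.53 degrees. The function name is my own, purely for illustration.

```python
import math

# Approximate apparent (angular) diameter of the sun as seen from Earth.
SUN_ANGULAR_DIAMETER_DEG = 0.53


def sun_image_diameter(distance_m: float) -> float:
    """Diameter in meters of the sun's image cast by a small gap
    located distance_m away from the surface the light lands on."""
    half_angle = math.radians(SUN_ANGULAR_DIAMETER_DEG) / 2
    return 2 * distance_m * math.tan(half_angle)


# Spot size for gaps at a few plausible heights in a tree canopy.
for height_m in (2, 5, 10):
    width_cm = sun_image_diameter(height_m) * 100
    print(f"gap {height_m} m above the ground: spot about {width_cm:.1f} cm wide")
```

Under these assumptions a gap five meters up casts a spot roughly 4 to 5 cm across, and the spot grows in proportion to the height of the gap, which may be why dappled light under a tall tree looks so much blobbier than under a low shrub.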

The ground, of course, looks different during an eclipse. The apparent shape of the sun changes relative to our position on earth, which means the shapes the sun casts on the ground change as well. That explains the crescent-shaped images on my window shades at approximately 9:30 AM yesterday: the sun was no longer a circle, and so neither were the shapes on my window shades.
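How much of the sun goes missing can be estimated with a little circle geometry. The sketch below treats the sun and the moon as two flat disks on the sky and computes the fraction of the sun's disk that the moon hides, using the standard formula for the overlap area of two circles. The function name, and the choice to measure everything in units of the sun's apparent radius, are my own assumptions for illustration.

```python
import math


def covered_fraction(separation: float, moon_radius: float = 1.0) -> float:
    """Fraction of the sun's disk hidden by the moon.

    Both bodies are treated as circles on the sky. Lengths are in units of
    the sun's apparent radius; separation is the distance between centers.
    """
    sun_radius = 1.0
    d, r = separation, moon_radius
    if d >= sun_radius + r:
        return 0.0  # disks do not overlap: no eclipse
    if d <= abs(sun_radius - r):
        # One disk lies entirely inside the other (total or annular phase).
        return min(r, sun_radius) ** 2 / sun_radius ** 2
    # Partial overlap: area of the lens shared by the two circles.
    a1 = sun_radius**2 * math.acos((d * d + sun_radius**2 - r * r) / (2 * d * sun_radius))
    a2 = r**2 * math.acos((d * d + r * r - sun_radius**2) / (2 * d * r))
    a3 = 0.5 * math.sqrt(
        (-d + sun_radius + r) * (d + sun_radius - r)
        * (d - sun_radius + r) * (d + sun_radius + r)
    )
    return (a1 + a2 - a3) / (math.pi * sun_radius**2)


# Moon's center sitting on the sun's edge, disks of equal apparent size.
print(f"{covered_fraction(1.0):.1%} of the sun is covered")
```

Whatever is left uncovered is the crescent, and that crescent is what each gap in the leaves projects onto the ground or the window shade.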

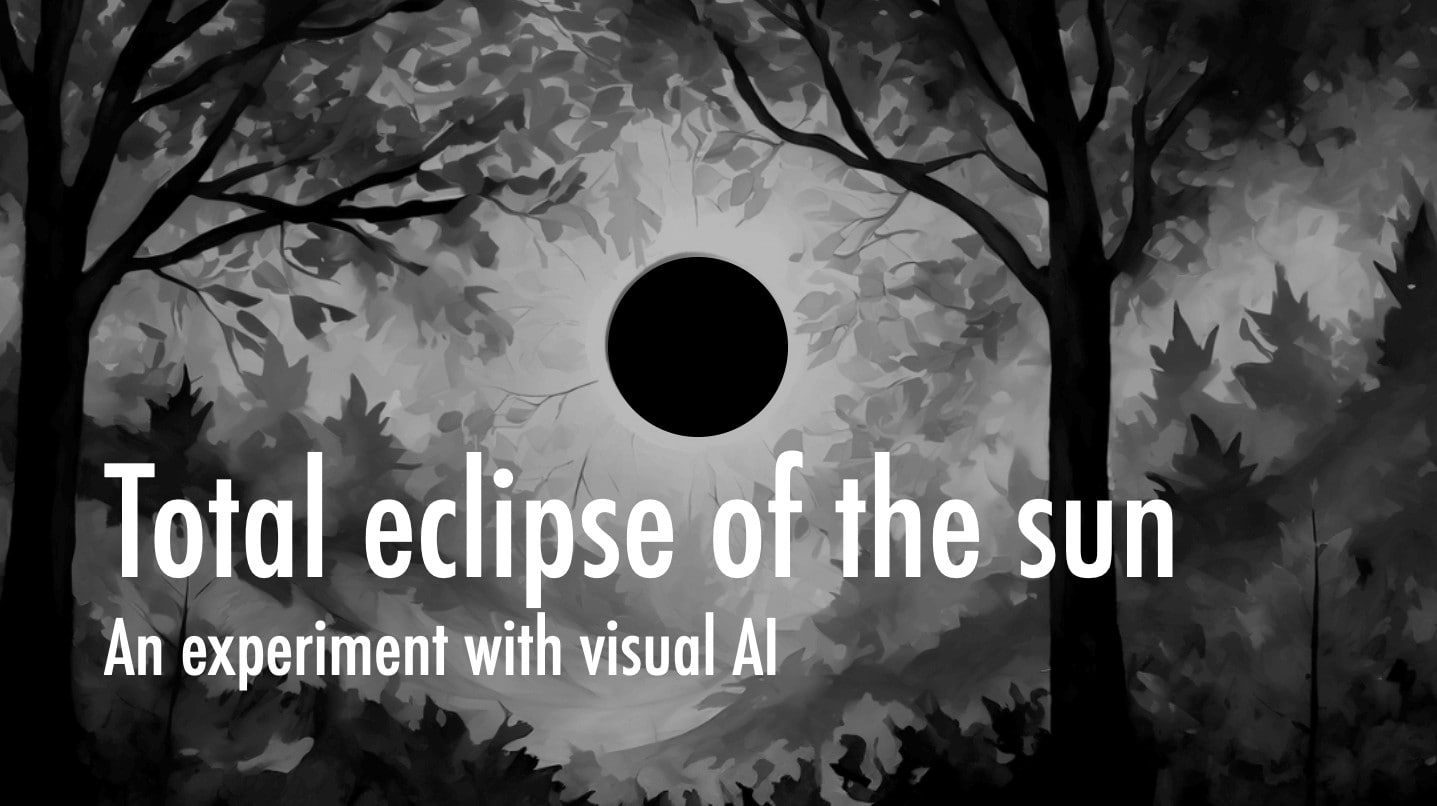

As it happens, this was not the first eclipse I had experienced and photographed. Below is a photo taken sometime in the early 1990s, when I was a graduate student in Urbana-Champaign. We were, luckily enough, in the path of a solar eclipse, and I had my camera in hand to capture the event. Of course, in my case that meant pointing the camera at the ground while standing below a tree. The photograph clearly captures the eclipse (actually multiple eclipses). (And yes, I am that guy who is taking pictures of the ground when all the action is happening up above.)

Given my recent experiments with ChatGPT’s new abilities to see and interpret images, I was curious to see what ChatGPT would make of this image. So I uploaded the image and asked ChatGPT what the photo depicted.

What is amazing is that, with relatively minimal prompting, not only did ChatGPT figure out that this image was caused by a solar eclipse, but it also figured out the date (and approximate time) the photo was taken (May 10, 1994, sometime between 11 am and 1 pm). Along the way, I also learned about a concept called “caustics,” which I had not encountered before.

Essentially, this interaction demonstrated its ability (as self-described by ChatGPT) to “combine image content analysis, historical data, pattern recognition, and iterative feedback to provide insights and information.”

I am including below the entire transcript of our conversation (with no editing whatsoever). There is an interesting meta-conversation at the end that may be worth reading as well 🙂

Prepare to be blown away…

This is a photo I took many years ago. It was sometime close to mid-day and I was standing below a tree. I pointed my camera at the ground and took this picture. What can you tell me about what is going on here.

The photo you’ve shared appears to capture the interplay of light and shadow on the ground. Specifically, the patterns of light are reminiscent of a phenomenon known as “caustics.” Here’s a breakdown of what seems to be happening:

- Caustics: The circular, concentric light patterns on the ground are a result of sunlight passing through the tree’s leaves and branches, especially if there are gaps between them. This creates a refracted or focused light pattern, which can be described as caustics. You might see similar patterns when light passes through wavy water surfaces or through glass objects.

- Tree’s Canopy: Since you mentioned you were standing below a tree, it’s evident that the light is being filtered through the tree’s canopy. Different densities and shapes of leaves, as well as the gaps between them, can create varied patterns of light and shadow on the ground below.

- Ground Surface: The texture and color of the ground also influence how these patterns appear. The surface seems a bit uneven, with some debris or small objects scattered about. These can interrupt the light patterns, adding more texture and depth to the image.

- Time of Day: Given that it was close to mid-day, the sun was likely at a high angle in the sky, which would create more direct and sharp patterns of light and shadow, as opposed to the elongated shadows you’d get during the early morning or late afternoon.

In summary, this photo beautifully captures the natural light show created by sunlight filtering through a tree’s canopy, resulting in intricate patterns of caustics on the ground. It’s a testament to the mesmerizing effects that can be achieved with simple elements like light and nature.

I think you are missing something here about the shapes of the light. What do those shapes look like and why do they look that way?

Ah, I see what you’re pointing out. The shapes of the light are circular or crescent-like. These patterns are not typical caustics but are, in fact, a result of a solar phenomenon.

The circular and crescent patterns you see are caused by the pinhole effect. When sunlight shines through small gaps between the leaves of trees, it acts similarly to a pinhole camera, projecting the image of the sun onto the ground below.

If the sun were perfectly round and unobscured at the time of the photograph, these patterns would be small circular spots of light. However, given the crescent and circular shapes in the light patterns, it suggests that the photo might have been taken during a solar eclipse. During a solar eclipse, when the moon partially covers the sun, the sun appears as a crescent shape. The gaps between the leaves then project this crescent image onto the ground, leading to the patterns you’ve captured.

So, in essence, your photograph likely captured the ground-level view of a solar eclipse through the natural “pinholes” created by the tree’s canopy! It’s a unique and beautiful way to observe such an event.

What if I told you that this photo was taken when I was a graduate student at UIUC in the late 90’s – can you figure out when this photo was taken?

Given the information you provided—that the photo was taken at the University of Illinois at Urbana-Champaign (UIUC) in the late 1990s and features the pinhole effect suggesting a solar eclipse—I can narrow it down.

On May 10, 1994, a significant solar eclipse was visible across parts of the United States, including Illinois. This was an annular solar eclipse, where the moon covered the center of the sun, leaving a ring-like appearance called the “ring of fire.” However, when observed through the natural pinholes like those created by the gaps between tree leaves, it would create crescent-shaped patterns of light on the ground.

Given the timing and the location, it’s likely that the photo you took was during this solar eclipse on May 10, 1994, at UIUC.

Can you place the approximate time the photo was taken?

Certainly! Using the solar eclipse of May 10, 1994, as a reference, and knowing that you were at the University of Illinois at Urbana-Champaign (UIUC), I can provide an approximation.

The annular phase of the eclipse, where the moon is directly between the Earth and the sun, resulting in the “ring of fire” appearance, traveled across the United States from the southwest to the northeast. As it moved eastward, local times for the maximum phase of the eclipse would have been later in the day.

For Urbana-Champaign, Illinois:

- The partial phase of the eclipse, where the moon starts covering the sun, began in the morning.

- The maximum eclipse, where the moon covered the largest portion of the sun visible from that location, occurred around midday.

- The eclipse ended in the early afternoon as the moon moved away from the sun’s path.

Given the information from the photo, where you mentioned it was taken close to midday and showed the crescent patterns typical of a partial eclipse, it’s likely the photo was taken sometime around the period of maximum eclipse. This would place the approximate time of the photo in the midday range, possibly between 11:00 AM and 1:00 PM local time.

Ok, that was great. Can you now summarize some key moves that you made in this entire process that would be surprising to others who do not know what you are capable of doing

Of course! Here are some key actions I took during our conversation, which might be surprising to those unfamiliar with my capabilities:

- Image Analysis: While I can’t “see” in the way humans do, I can analyze image content based on descriptions and recognize patterns. When you shared the photo, I initially identified patterns of light and shadow, leading to a discussion of caustics.

- Pattern Recognition: Upon further discussion, I recognized the unique crescent and circular light patterns as indicative of a solar eclipse observed through the natural “pinholes” created by a tree’s canopy.

- Historical Data Cross-Referencing: Using the context you provided—being a graduate student at UIUC in the late ’90s—I recalled a significant solar eclipse in 1994 and inferred that this was the event you captured.

- Time Estimation: By understanding the path and timing of the 1994 solar eclipse across the U.S., I was able to approximate the time of day your photo was likely taken based on the location (Urbana-Champaign, Illinois) and the crescent patterns indicating a partial phase of the eclipse.

- Adaptability: Throughout our conversation, I adjusted and refined my responses based on the information you provided, ensuring a collaborative approach to understanding the context and significance of the image.

These actions highlight my ability to combine image content analysis, historical data, pattern recognition, and iterative feedback to provide insights and information.
